Python extract_valuable_HTML_from_WordPress_site_download.py mywebsite For example, if the downloaded website is in a folder mywebsite/, then put the Python program in the parent folder of mywebsite/ and run

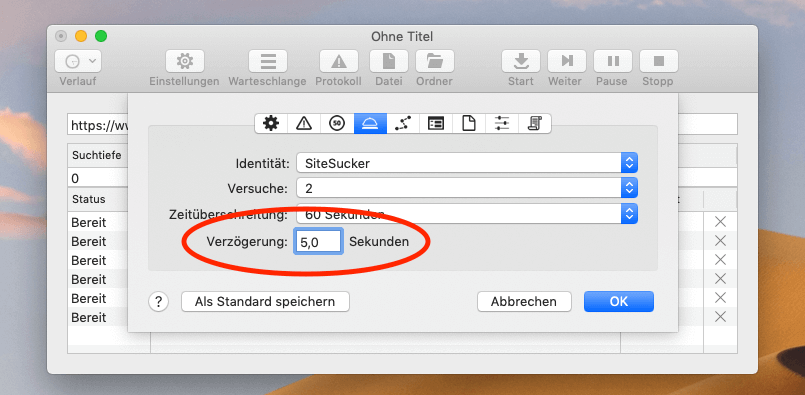

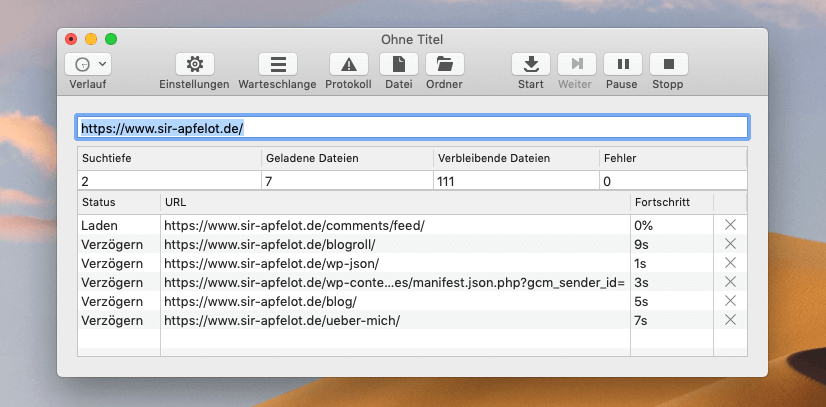

Second, run extract_valuable_HTML_from_WordPress_site_download.py (or a similar program of your own) on the website-download folder. First, download the website using a program like HTTrack or SiteSucker. My preferred way to back up a website is as follows. Gathering the website's text Method 1: Download website and extract relevant HTML In my opinion, if you back up a website on paper, you should print out the raw HTML of each page rather than copying and pasting text directly from the web browser, because copying and pasting text from a browser (in addition to not being scalable) loses hyperlinks, as well as HTML comments, custom JavaScript, and other information that you can only see in a page's markup. Of course, paper backups are more of a pain to use for recovery than are backups already in electronic format, but presumably paper backups could be scanned and read back into electronic text using OCR. So information stored on paper seems particularly resilient against civilizational-collapse scenarios. In addition, digital files require a computer and electricity in order to be read, while plain text on paper does not. Digital files may be vulnerable to format rot over time, and the hardware that stores digital files could break or fail to be supported in the future. However, digital data is also more brittle in several ways. Storing data digitally has many benefits over storing data on paper.
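If you would rather write "a similar program of your own" for the extraction step, the following is a minimal sketch of what such a program might look like. It is not the extract_valuable_HTML_from_WordPress_site_download.py script itself. The function names (`extract_main_html`, `collect_pages`, `write_combined`) are hypothetical, and it assumes that the site's theme wraps each post in an `<article>` element, which is common for WordPress themes but not guaranteed. Pages without an `<article>` fall back to the `<body>`, and then to the whole file.

```python
import re
from pathlib import Path

# Assumption: the theme wraps each post in <article>...</article>.
# The non-greedy match stops at the first closing tag, so nested
# <article> elements would be cut short.
ARTICLE_RE = re.compile(r"<article\b.*?</article>", re.IGNORECASE | re.DOTALL)
BODY_RE = re.compile(r"<body\b.*?</body>", re.IGNORECASE | re.DOTALL)


def extract_main_html(page_html):
    """Return the first <article> element, else the <body>, else the whole page."""
    for pattern in (ARTICLE_RE, BODY_RE):
        match = pattern.search(page_html)
        if match:
            return match.group(0)
    return page_html


def collect_pages(download_dir):
    """Map each .html/.htm file's path (relative to download_dir) to its extracted markup."""
    root = Path(download_dir)
    pages = {}
    for path in sorted(root.rglob("*")):
        if path.is_file() and path.suffix.lower() in {".html", ".htm"}:
            # errors="replace" keeps going if a page is not valid UTF-8.
            text = path.read_text(encoding="utf-8", errors="replace")
            pages[path.relative_to(root).as_posix()] = extract_main_html(text)
    return pages


def write_combined(pages, out_path):
    """Write all extracted markup to one text file, with a header line per page."""
    with open(out_path, "w", encoding="utf-8") as out:
        for rel_path, markup in pages.items():
            out.write(f"===== {rel_path} =====\n{markup}\n\n")
```

With the download in mywebsite/, calling `write_combined(collect_pages("mywebsite"), "extracted_html.txt")` would produce a single file of raw markup that keeps hyperlinks and HTML comments and is ready to print. The output filename here is only an example.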
